Visibility & control

See exactly what's happening at every step of your agent. Steer your agent to accomplish critical tasks the way you intended.

LangSmith Observability gives you complete visibility into agent behavior with tracing, real-time monitoring, alerting, and high-level insights into usage.

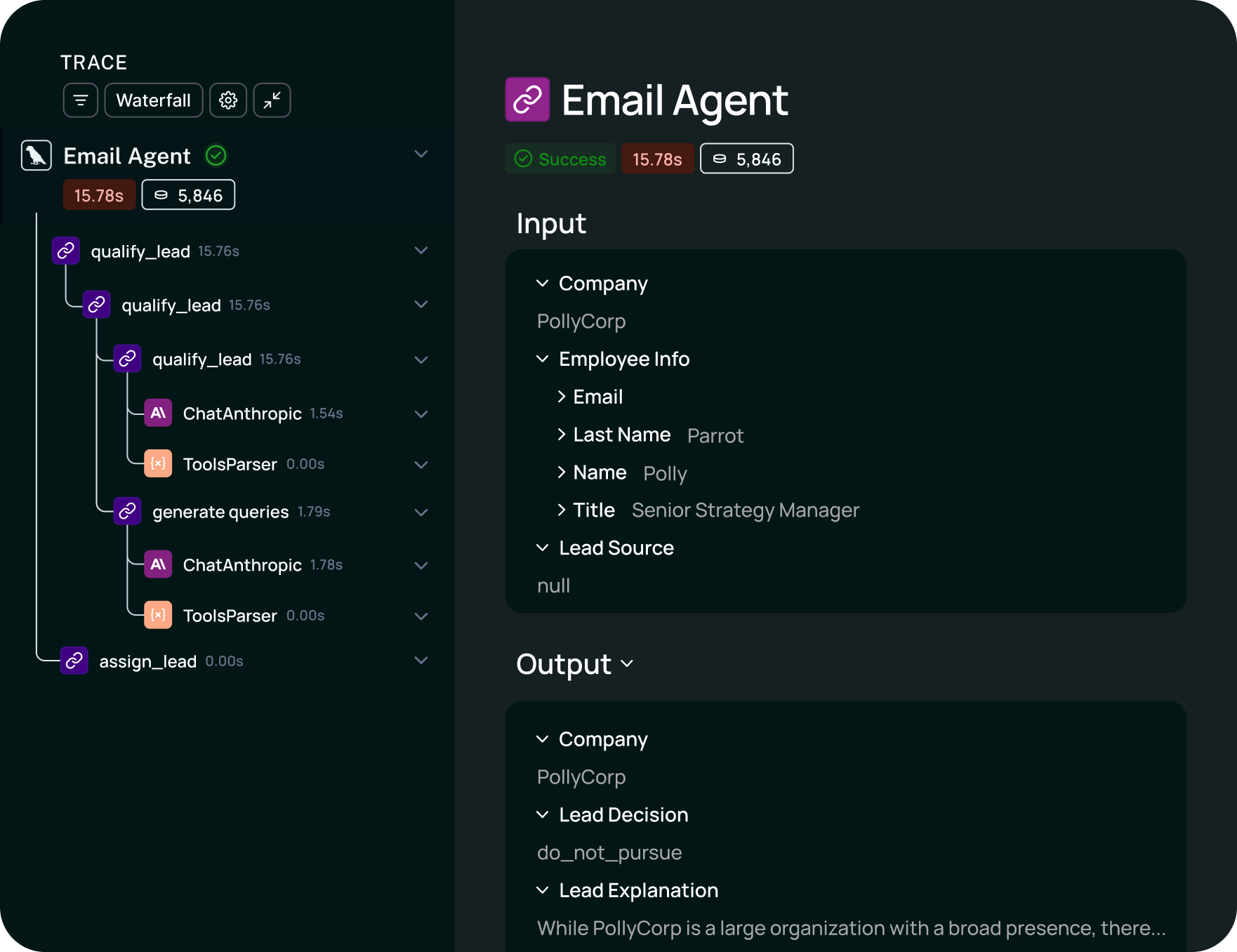

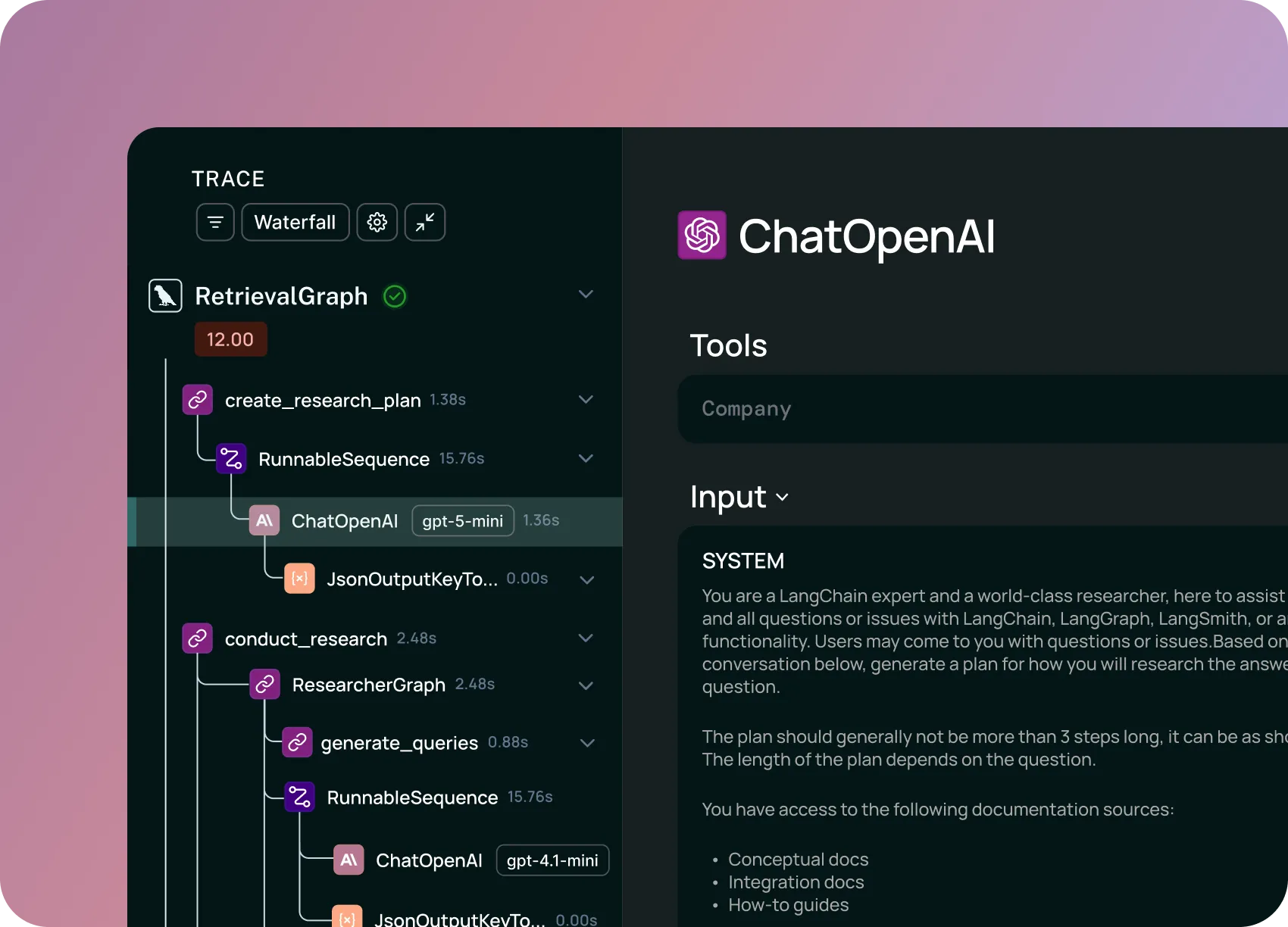

Quickly debug and understand non-deterministic LLM app behavior with tracing. See what your agent is doing step by step, then fix issues to improve latency and response quality.

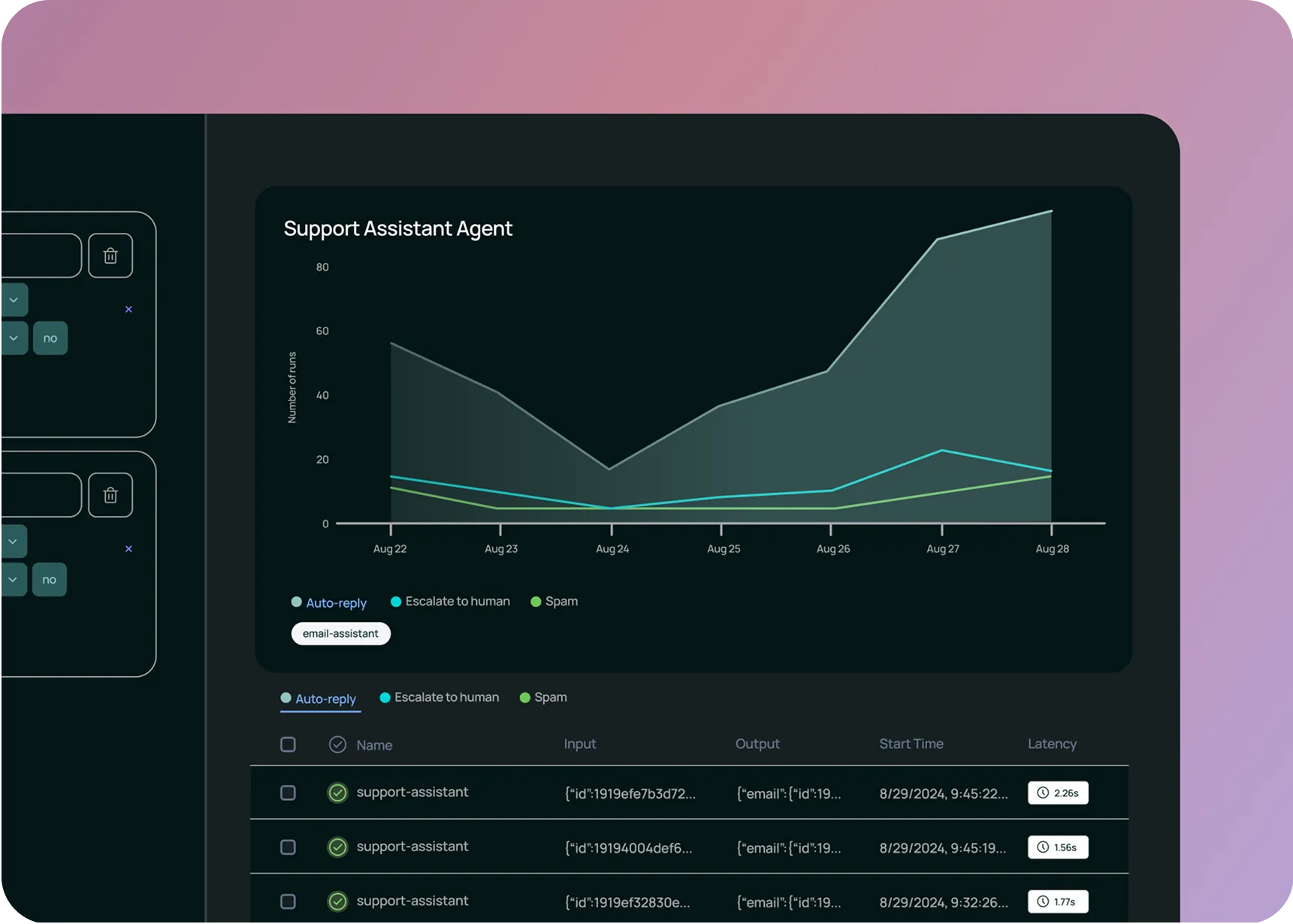

Track business-critical metrics like costs, latency, and response quality with live dashboards. Get alerts when issues happen and drill into the root cause.

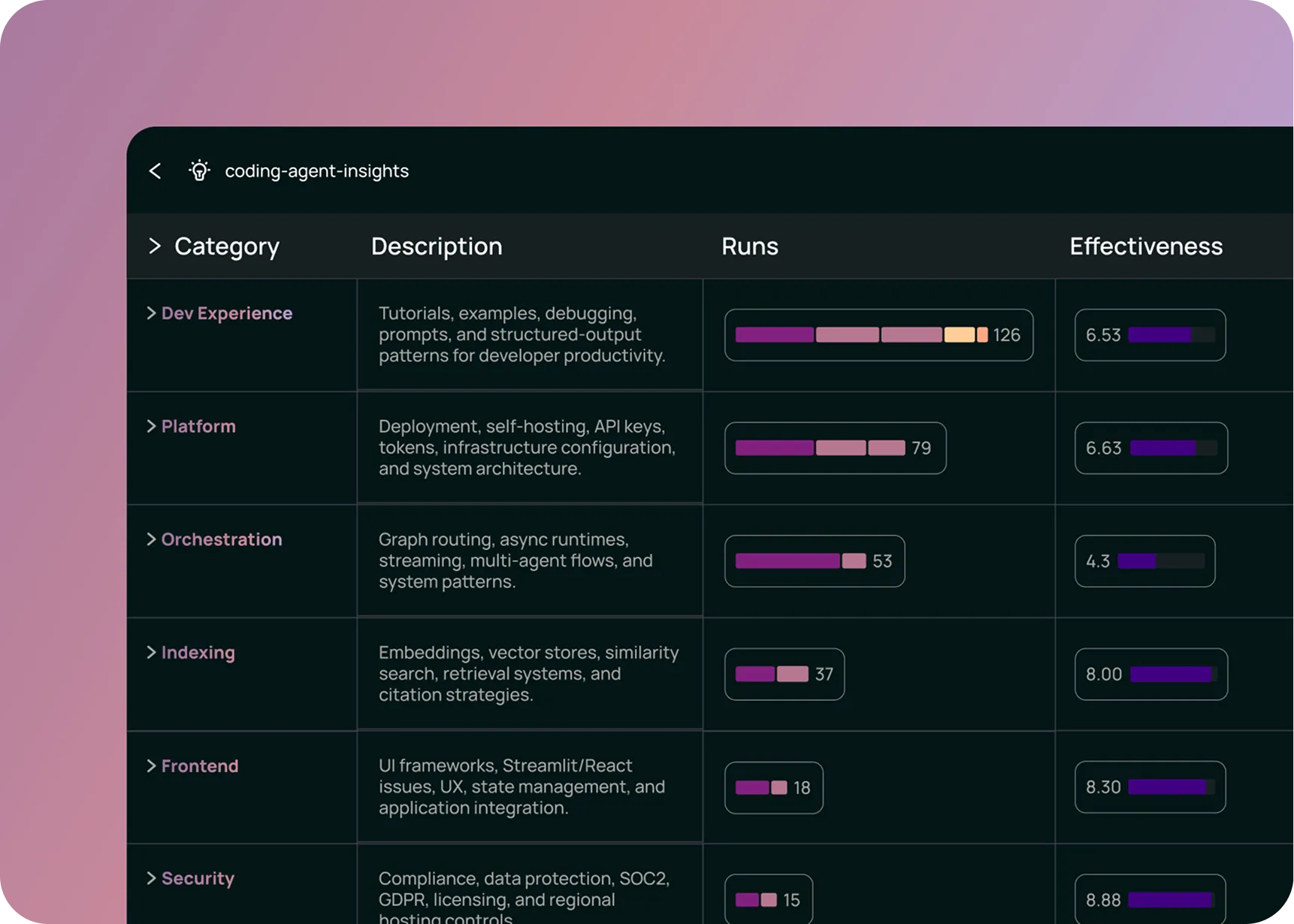

See clusters of similar conversations to understand what users actually want and quickly find all instances of similar problems to address systemic issues.

Move rapidly through the build, test, deploy, learn, and repeat loop with workflows that span the entire agent engineering lifecycle.

Ship at scale with agent infrastructure designed for long-running workloads and human oversight.

Keep your current stack. LangSmith works with your preferred open-source framework or custom code.

LangSmith works with any framework. If you're already using LangChain or LangGraph, just set one environment variable to get started with tracing your AI application.
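As a rough sketch of the "one environment variable" step, the snippet below turns tracing on for the current Python process before any LangChain or LangGraph code runs. The page does not name the variable, so `LANGSMITH_TRACING` and `LANGSMITH_API_KEY` are assumptions here, and `enable_langsmith_tracing` is a hypothetical helper, not part of any SDK. Check the LangSmith documentation for the exact names your version expects.

```python
import os
from typing import Dict, Optional


def enable_langsmith_tracing(api_key: Optional[str] = None) -> Dict[str, str]:
    """Set tracing-related environment variables for the current process.

    Hypothetical helper for illustration only; not part of any LangSmith SDK.
    """
    # Assumed variable name: the source only says "one environment variable".
    os.environ["LANGSMITH_TRACING"] = "true"

    # Assumed variable name: an API key is typically needed to send traces.
    if api_key is not None:
        os.environ["LANGSMITH_API_KEY"] = api_key

    # Return every LANGSMITH_* setting now visible to this process.
    return {k: v for k, v in os.environ.items() if k.startswith("LANGSMITH_")}


if __name__ == "__main__":
    settings = enable_langsmith_tracing()
    # Print only the variable names so a key is never echoed to the terminal.
    print(sorted(settings))
```

In practice the same variables are usually exported in the shell or a deployment config rather than set in code; setting them in-process, as here, only affects libraries that read the environment afterwards.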